MMDA toolkit

Exploring the “Fukushima Effect”

From CCDA to MMDA – Our Methodological Vision

We take the method of corpus-based critical discourse analysis (CCDA) as a starting point for a multi-disciplinary integration. Similar to CCDA, we operationalize a discourse as the pairing of a topic (e.g. nuclear energy) with certain attitudes or opinions towards this topic (e.g. a fear of nuclear disasters). We also make the fundamental assumption that both topics and attitudes can be characterized by suitable patterns of lexical items (words or fixed expressions such as slogans) – an assumption shared, amongst others, by CCDA, topic modelling and sentiment analysis.

Our methodology puts the human analyst at the center of a highly dynamic procedure that integrates state-of-the-art approaches from multiple disciplines, qualitative and quantitative perspectives, as well as human, semi-automatic and fully automatic analyses: The CCDA core of our procedure (entitled “MMDA” – Mixed Methods Discourse Analysis) makes use of corpus-linguistic methods developed and refined by our research group, including distributional similarity, multivariate analysis of correlational patterns and improved approaches to collocation analysis, as well as state-of-the-art techniques from natural language processing.

These methods are complemented by the theoretical background and insights of a human analyst rooted in the discipline of cultural studies. The human analyst ideally brings along expertise in both the discourse to be analyzed and the techniques of the tool, merging into a hermeneutic cyborg (cf. Stefan Evert’s Sinclair Lecture “The Hermeneutic Cyborg”).

Functionality

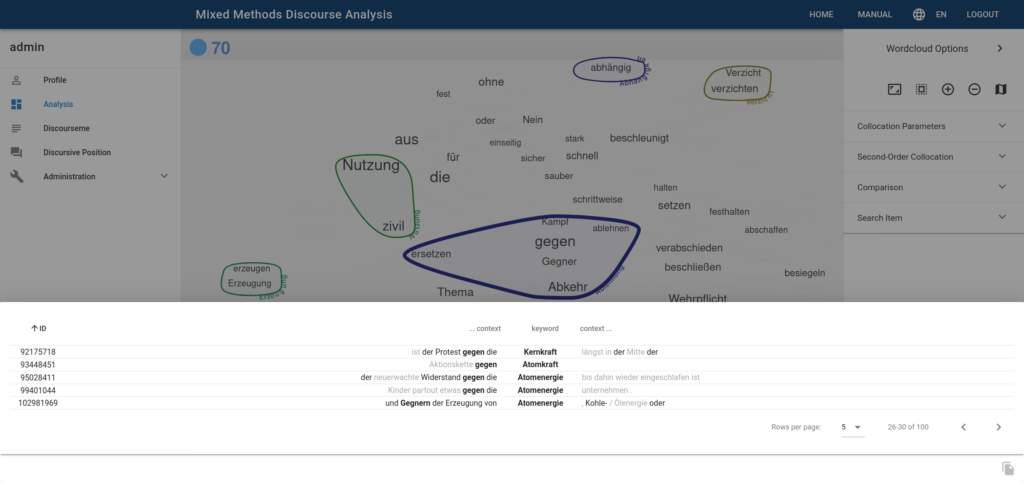

It is worth mentioning that although CCDA reduces research bias – since the researcher is confronted with empirical data – this triangulation comes with an intense work load. In the current state of affairs, the main functionalities implemented in the MMDA toolkit are supposed to ease the manual work of hermeneutic interpretation. Since a major task of interpretation involves categorizing collocates of a topic query into groups, the toolkit assists by visualizing the results of the collocation analysis in an appropriate manner. On that account, we project high-dimensional word vectors onto a two-dimensional space. See the following figure for an outcome of this process, which visualizes the collocates for nuclear energy (“Kernergie | Kernkraft | Atomkraft | Atomenergie | Nuklearenergie”) in a major German newspaper:

Semantically similar words are neighbours in the map, and the hermeneutic interpreter thus can rely on pre-grouped words to form categories. The size of the lexical items reflects their statistical association to the topic node (as measured by Log-Likelihood in this case). The toolkit supports interactive grouping of the collocates as well as a range of options in order to tweak the visualization, including a choice of different association measures and window sizes for the initial collocation analysis.

Semantically similar words are neighbours in the map, and the hermeneutic interpreter thus can rely on pre-grouped words to form categories. The size of the lexical items reflects their statistical association to the topic node (as measured by Log-Likelihood in this case). The toolkit supports interactive grouping of the collocates as well as a range of options in order to tweak the visualization, including a choice of different association measures and window sizes for the initial collocation analysis.

Further advantages of MMDA can be realized by interacting with the toolkit: Having grouped collocates together, the researcher can display concordances of discourseme constellation, i.e. collocates of the (topic, group)-pair, and start additional collocation analyses in order to triangulate the semantics of the discourse at hand.

Demo and Implementation Details

We have a demo of our toolkit up and running at https://geuselambix.phil.uni-erlangen.de/. Drop us a line if you want to have access.

The backend is implemented in Python using Flask and relies on the python module cwb-ccc (see PyPI or github), which builds upon the IMS Open Corpus Workbench; all low-level CWB calls are being abstracted using Python. This is mostly done using pandas dataframes and native python classes. All collocates extracted from a corpus are being processed using computational linguistic techniques such as Word2Vec, dimensionality reduction is done using t-distributed stochastic neighbour embedding (t-SNE). Finally, a standardized (HTTP) API is provided using Flask. This interface is used to abstract complex operations and deliver structured data to a frontend for visualisation.

The current (January 2021) frontend implementation uses Vue.js and Konva and is used for visualization and interaction with the data provided. A modern Material Design UI was created for this purpose, based on the open-source JavaScript framework Vue.js. A HTML5 2D Canvas library handles the visualization of the data.

Both frontend and backend are developed on gitlab.