Past Projects

You can navigate to a selection of our past projects via the navigation bar on the left-hand side.

PING

Prompt Higher Learning – Improving (Higher) Education with AI-Supported Writing Tools?!

This interdisciplinary project aims to develop usage scenarios for AI-supported chatbots in educational institutions and to provide corresponding educational offerings. The focus is on applications in teaching and learning scenarios at universities, schools and other educational institutions. In project seminars, participants develop and evaluate their own case studies.

(Third Party Funds Single)

Project leader:

Project members: , ,

Start date: 2024-04-01

End date: 2026-03-31

Acronym: Freiraum 2023

Funding source: Foundations

NormRechts

DFG project: The Normalisation of Right-Wing Populist and New Right Discourses in Japan and Germany

(Third Party Funds Group – Overall project)

Project leader: ,

Project members: ,

Start date: 2022-04-01

End date: 2025-03-31

Acronym: NormRechts

Funding source: Deutsche Forschungsgemeinschaft (DFG)

Abstract:

The Chair of Japanese Studies with a focus on modern and contemporary Japan is part of the DFG-funded project "The Normalisation of Right-Wing Populist and New Right Discourses in Japan and Germany", which is carried out as an interdisciplinary cooperation with the Chair of Corpus and Computational Linguistics at FAU's Faculty of Humanities, Social Sciences, and Theology. From a discourse-analytical perspective, this comparative research project examines various instances of political populism as a "thin ideology" (Mudde/Kaltwasser) with regard to their ideological proximity to New Right discourses in Japan and Germany. In particular, it analyses the long-term effects of New Right discursive strategies and right-wing populist politics on everyday language and the field of political discourse, using methods from corpus and computational linguistics as well as corpus-based critical discourse analysis.

Publications:

- , , , , , , , :

Automatic Identification of COVID-19-Related Conspiracy Narratives in German Telegram Channels and Chats

The 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024) (Turin, 2024-05-20 - 2024-05-25)

In: Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, Nianwen Xue (ed.): Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024) 2024

Open Access: https://aclanthology.org/2024.lrec-main.173

URL: https://aclanthology.org/2024.lrec-main.173

- , :

Russian State-controlled Propaganda and its Proxies: Pro-Russian Political Actors in Japan

In: Asia-Pacific Journal : Japan Focus 22 (2024)

ISSN: 1557-4660

Open Access: https://apjjf.org/wp-content/uploads/2024/04/Article_5836.pdf

URL: https://apjjf.org/2024/3/schafer-kalashnikova

- (ed.):

The Online Ecosystem of the Japanese Far Right: Platforms, Actors, Organizations (Series Editor)

2024

(Asia-Pacific Journal: Japan Focus)

URL: https://apjjf.org/online-ecosystem

- , :

Parroting the U.S. Far-Right: Former Fringe Party Politician and Conspiracy Entrepreneur Yukihisa Oikawa

In: Asia-Pacific Journal : Japan Focus 22 (2024), Article No.: 4

ISSN: 1557-4660

Open Access: https://apjjf.org/wp-content/uploads/2024/06/Article_5850.pdf

URL: https://apjjf.org/2024/6/havenstein-schafer

- , , , , , , :

From Linguistic to Discursive Patterns: Introducing Discoursemes as a Basic Unit of Discourse Analysis

In: CADAAD Journal. Critical Approaches to Discourse Analysis across Disciplines 16 (2024), p. 87-111

ISSN: 1752-3079

DOI: 10.21827/cadaad.16.2.42457

Media

- Interview on fake news in the German federal election (Bundestagswahl) on Frankenfernsehen (04.02.2025)

LeAK & AnGer

Automatic Anonymisation and Pseudonymisation of Court Decisions

German courts are legally required to publish their verdicts, but according to estimates, only a small share (under 3%) of all court decisions is published each year. The transparency and availability of such documents not only grant legal professionals and the general public access to a crucial source of information, but are also important for the legal-tech industry and the digitalisation of government institutions. The main reason for the low publication rate is that anonymisation is time-consuming and still carried out manually. Moreover, to the best of our knowledge, there is no high-quality German corpus available for training a fully automatic model, and most existing tools are semi-automatic systems that merely support the manual anonymisation process. In LeAK and AnGer, we want to fill these gaps by creating high-quality annotated training data from verdicts in different areas of law and by developing a prototype for fully automatic anonymisation.

AnGer

- Start date: 01.01.2023

- End date: 31.12.2025

- Funding source: Bundesministerium für Bildung und Forschung (BMBF)

Motivated by the promising results of our prior work in LeAK, the main objectives of the AnGer project are the further development of our automatic anonymisation system and the creation of a new high-quality annotated dataset consisting of court decisions from higher regional courts (Oberlandesgericht, OLG). Moreover, the model's generalisation across different areas of law remains a challenge that this project addresses. We are working on data augmentation techniques and on robust neural networks to make our current prototype more robust. Data augmentation in particular can also generate additional training samples for domains that lack training data. Further, according to our findings in LeAK, domain adaptation is still required in certain domains. We therefore create learning curves for the AG (Amtsgericht) and OLG datasets to visualise the learning quality at each training step and to analyse the amount of data needed for robust domain adaptation. We also work on an approach for continuous domain adaptation that does not require retraining on all data. Finally, we want to carry out legal-tech studies and conduct de-anonymisation experiments with the annotated gold standard.

LeAK

- Start date: 01.04.2020

- End date: 31.03.2022

- Funding source: Bayerisches Staatsministerium der Justiz (StMJ)

This project explores the feasibility of a fully automatic anonymisation system for German court decisions. One of our key contributions is the development of a high-quality, manually annotated gold standard for verdicts from district courts (Amtsgericht). To ensure the absence of privacy-related information, at least six people work on each document: every text in our dataset is annotated independently by four student annotators, who identify the text spans that need to be anonymised, their information categories (names, addresses, jobs or dates) and their risk levels (high, middle and low), and is then adjudicated by two further annotators. Text anonymisation is thus approached as Named Entity Recognition (NER). Another essential aspect of the project is the systematic evaluation of different approaches to automatic anonymisation, using existing NER taggers as well as several Large Language Models (LLMs) fine-tuned on our gold standard. We are also working on a multitask architecture to enhance the robustness of the anonymisation system. In the course of these experiments, the transferability of our prototype is also to be validated on documents from other areas of law from higher regional courts (Oberlandesgericht). Preliminary results indicate that domain adaptation is needed to achieve good performance on court decisions from other text domains.
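To illustrate what approaching anonymisation as NER means in practice, here is a minimal sketch that replaces annotated text spans with numbered category placeholders. The span format, the category labels and the `pseudonymise` helper are hypothetical and not taken from the LeAK system; in a real pipeline the spans would come from a trained tagger rather than from string search.

```python
from typing import Dict, List, Tuple

# A span is (start, end, category); offsets are character positions, end exclusive.
Span = Tuple[int, int, str]


def pseudonymise(text: str, spans: List[Span]) -> str:
    """Replace each annotated span with a numbered category placeholder.

    Identical surface forms of the same category share one placeholder,
    so repeated mentions of a person stay recognisable as the same entity.
    """
    counters: Dict[str, int] = {}
    placeholders: Dict[Tuple[str, str], str] = {}
    pieces: List[str] = []
    cursor = 0
    for start, end, category in sorted(spans):
        if start < cursor:
            raise ValueError("overlapping spans are not supported in this sketch")
        key = (category, text[start:end])
        if key not in placeholders:
            counters[category] = counters.get(category, 0) + 1
            placeholders[key] = f"[{category}_{counters[category]}]"
        pieces.append(text[cursor:start])   # untouched text before the span
        pieces.append(placeholders[key])    # placeholder instead of the span
        cursor = end
    pieces.append(text[cursor:])
    return "".join(pieces)


# Toy input: spans are located by string search purely for illustration.
text = "Herr Meier wohnt in Erlangen. Meier ist Lehrer."
spans: List[Span] = []
for surface, category in [("Meier", "NAME"), ("Erlangen", "ADDRESS"), ("Lehrer", "JOB")]:
    start = text.find(surface)
    while start != -1:
        spans.append((start, start + len(surface), category))
        start = text.find(surface, start + len(surface))

print(pseudonymise(text, spans))
```

Sorting the spans and tracking a cursor keeps the replacement a single left-to-right pass, which also makes overlapping annotations easy to detect.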

RC21

Reading concordances in the 21st century (RC21)

Joint project of FAU Erlangen-Nürnberg and the University of Birmingham

Project leaders: Stephanie Evert, Michaela Mahlberg

Project members: Alexander Piperski, Nathan Dykes

Start date: 2023-02-23

End date: 2026-03-31

Acronym: RC21

Funding source: Deutsche Forschungsgemeinschaft (project no. 508235423), Arts and Humanities Research Council (project no. AH/X002047/1)

Abstract:

In today’s digital world, the amount of text communicated in electronic form is ever-increasing and there is a growing need for approaches and methods to extract meanings from texts at scale. Corpus linguists have long been studying digitised texts and have established that much of language is characterised by recurring patterns. So the word ‘eye’ can appear together with words like ‘cream’ and ‘test’, or words like ‘closed’ and ‘fixed’. In corpus linguistics, such patterns are identified with the help of concordances, i.e. displays that show many occurrences of a word, phrase or construction across a range of contexts in a compact format. However, lacking a well-established and clear-cut methodology, the art of reading concordances has not yet realised its full potential. At the same time, there has been very little innovation in algorithms in the concordance software packages available to corpus linguists.

This project proposes an innovative approach to reading concordances in the 21st century. Through the collaboration between the University of Birmingham and FAU Erlangen-Nürnberg we combine strengths in theoretical work in corpus linguistics with expertise in computational algorithms in order to develop a systematic methodology for reading concordances. We will develop tool-independent strategies and corresponding algorithms for the semi-automatic organisation of concordance lines, and implement them in the software FlexiConc. To develop and test our approach, we will conduct two case studies. The first will focus on body language in fiction compared to non-fiction texts. The second will focus on political argumentation in social media, formalising its findings as corpus queries that can be used for automatic argumentation mining. Both case studies include a comparative dimension between English and German. Hence, they broaden out approaches to concordance reading which have been very focused on the English language so far. Through these case studies, we will establish an approach that not only provides innovation in corpus linguistics, but also has wider implications for the analysis of textual data at scale, while still retaining a humanities perspective.

We will develop FlexiConc as open-source software, so that other researchers can use it as an off-the-shelf tool or integrate it into existing concordance tools or their own software environment. Both FlexiConc and our tool-independent approach to concordance analysis will have relevance beyond corpus linguistics, providing innovative approaches and algorithms for disciplines such as digital humanities and computational social science. We will raise awareness of the new possibilities in a variety of forms, for instance, through a project blog where users of our software can share their experience, and with the help of an advisory board of leading international experts. We will run training sessions at summer schools and conferences and make educational materials available online.
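As a rough illustration of what a concordance is and what organising its lines can mean, the following sketch builds keyword-in-context (KWIC) lines for a node word and sorts them by their right-hand context. It is a toy example under simple assumptions (lower-cased word tokens, exact match on the node); the function names are hypothetical and this is not the FlexiConc API.

```python
import re
from typing import List, Tuple

KwicLine = Tuple[str, str, str]  # (left context, node, right context)


def kwic(text: str, node: str, window: int = 3) -> List[KwicLine]:
    """Return one concordance line per occurrence of `node` in `text`."""
    tokens = re.findall(r"\w+", text.lower())
    lines: List[KwicLine] = []
    for i, token in enumerate(tokens):
        if token == node:
            left = " ".join(tokens[max(0, i - window):i])
            right = " ".join(tokens[i + 1:i + 1 + window])
            lines.append((left, token, right))
    return lines


def sort_by_right_context(lines: List[KwicLine]) -> List[KwicLine]:
    """Order lines alphabetically by the words following the node."""
    return sorted(lines, key=lambda line: line[2])


sample = (
    "She closed her eyes. He bought an eye cream. "
    "Her eyes were fixed on him. The eye test was quick."
)

for left, node, right in sort_by_right_context(kwic(sample, "eye")):
    # Right-align the left context so that the node words line up in a column.
    print(f"{left:>20}  {node}  {right}")
```

Sorting by the right context places lines such as "eye cream" and "eye test" next to similar continuations, which is the kind of grouping a reader looks for when scanning a concordance for patterns.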

Conference presentations:

Stephanie Evert, Natalie Finlayson, Michaela Mahlberg, and Alexander Piperski. Computer-assisted concordance reading. Corpus Linguistics 2023 Conference (03–06 July 2023, Lancaster)

Tracking the infodemic

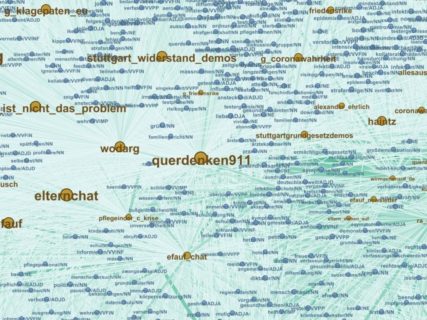

Tracking the infodemic: Conspiracy theories in the corona crisis

(Third Party Funds Single)

Project leader: ,

Project members: , , , ,

Start date: 2021-04-01

End date: 2022-09-30

Funding source: Volkswagen Stiftung

Abstract:

With insufficient scientific knowledge about the new Coronavirus and public controversies about the effectiveness and diversity of implemented countermeasures, the ongoing pandemic has triggered great insecurity and lack of information, which has prepared fertile soil for the spread of conspiracy theories. In our research, we apply innovative corpus-linguistic methods to analyze the use and distribution of typical linguistic patterns of conspiracy theories and study the discursive strategies they share with right-wing populist and extremist discourses. Besides providing important insights regarding the current situation, our goal is to operationalize and automate our methods so that they can be applied to the spread of conspiracy theories and misinformation in the future.

Publications:

- , , , , , , , :

Automatic Identification of COVID-19-Related Conspiracy Narratives in German Telegram Channels and Chats

The 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024) (Turin, 2024-05-20 – 2024-05-25)

In: Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, Nianwen Xue (ed.): Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024) 2024

Open Access: https://aclanthology.org/2024.lrec-main.173

URL: https://aclanthology.org/2024.lrec-main.173

RANT & RAND

Reconstructing Arguments from Newsworthy Debates

(Third Party Funds Single)

Project leader:

Project members: , , ,

Start date: 2021-01-01

End date: 2023-12-31

Acronym: RAND

Funding source: DFG / Schwerpunktprogramm (SPP)

URL: https://www.linguistik.phil.fau.de/projects/rant/

Abstract:

Large portions of ongoing political debates are available in machine-readable form nowadays, ranging from the formal public sphere of parliamentary proceedings to the semi-public sphere of social media. This offers new opportunities for gaining a comprehensive overview of the arguments exchanged, using automated techniques to analyse text sources. The goal of the RANT/RAND project series within the priority programme RATIO (Robust Argumentation Machines) is to contribute to the automated extraction of arguments and argument structures from machine-readable texts via an approach that combines logical and corpus-linguistic methods and favours precision over recall, on the assumption that the sheer volume of available data will allow us to pinpoint prevalent arguments even under moderate recall. Specifically, we identify logical patterns corresponding to individual argument schemes taken from standard classifications, such as argument from expert opinion; essentially, these logical patterns are formulae with placeholders in dedicated modal logics. To each logical pattern we associate several linguistic patterns corresponding to different realisations of the formula in natural language; these patterns are developed and refined through corpus-linguistic studies and formalised in terms of corpus queries. Our approach thus integrates the development of automated argument extraction methods with work towards a better understanding of the linguistic aspects of everyday political argumentation. Research in the ongoing first project phase is focused on designing and evaluating patterns and queries for individual arguments, with a large corpus of English Twitter messages used as a running case study.

In the second project phase, we plan to test the robustness of our approach by branching out into additional text types, in particular longer coherent texts such as newspaper articles and parliamentary debates, as well as by moving to German texts, which present additional challenges for the design of linguistic patterns (i.a. due to long-distance dependencies and limited availability of high-quality NLP tools). Crucially, we will also introduce similarity-based methods to enable complex reasoning on extracted arguments, representing the fillers in extracted formulae by specially tailored neural phrase embeddings. Moreover, we will extend the overall approach to allow for the high-precision extraction of argument structure, including explicit and implicit references to other arguments. We will combine these efforts with more specific investigations into the logical structure of arguments on how to achieve certain goals and into the interconnection between argumentation and interpersonal relationships, e.g. in ad-hominem arguments.
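The idea of a linguistic pattern with placeholders can be sketched with an ordinary regular expression. The example below is only a loose analogy for one realisation of the argument from expert opinion: the pattern, the verb list and the `extract_expert_opinions` helper are assumptions made for illustration, whereas the project formalises its patterns as corpus queries over annotated corpora, not as regular expressions over raw text.

```python
import re
from typing import Dict, List

# Hypothetical surface pattern: "<Expert> says/argues/warns that <claim>".
# The named groups play the role of the placeholders in the logical pattern.
EXPERT_OPINION = re.compile(
    r"\b(?P<expert>[A-Z][\w-]+(?: [A-Z][\w-]+)*) "
    r"(?:says|said|argues|argued|warns|warned) that "
    r"(?P<claim>[^.!?]+)"
)


def extract_expert_opinions(text: str) -> List[Dict[str, str]]:
    """Return the placeholder fillers for every match of the pattern."""
    return [
        {"expert": m.group("expert"), "claim": m.group("claim").strip()}
        for m in EXPERT_OPINION.finditer(text)
    ]


tweet = (
    "Professor Smith warns that leaving the EU will hurt trade. "
    "Nobody knows what happens next."
)
print(extract_expert_opinions(tweet))
```

A narrow pattern like this misses most paraphrases, which mirrors the precision-over-recall trade-off described above: few matches, but each match can be mapped to the fillers of a formula.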

Publications:

- , , , , :

Argumentation Schemes for Blockchain Deanonymization

Sixteenth International Workshop on Juris-informatics (JURISIN 2022) (Kyoto International Conference Center, Kyoto, Japan, 2022-06-13 - 2022-06-14)

- , :

Common Knowledge of Abstract Groups

Thirty-Seventh AAAI Conference on Artificial Intelligence, Thirty-Fifth Conference on Innovative Applications of Artificial Intelligence, Thirteenth Symposium on Educational Advances in Artificial Intelligence (Washington DC, 2023-02-07 - 2023-02-14)

DOI: 10.1609/aaai.v37i5.25791

- , :

A Formal Treatment of Expressiveness and Relevance of Digital Evidence

In: Digital Threats: Research and Practice (2023)

ISSN: 2576-5337

DOI: 10.1145/3608485

- , , , , :

Leveraging High-Precision Corpus Queries for Text Classification via Large Language Models

First Workshop on Language-driven Deliberation Technology (DELITE) @ LREC-COLING 2024 (Torino, Italy, 2024-05-20 - 2024-05-20)

In: Hautli-Janisz A, Lapesa G, Anastasiou L, Gold V, Liddo AD, Reed C (ed.): Proceedings of the First Workshop on Language-driven Deliberation Technology (DELITE) @ LREC-COLING 2024, Torino, Italy: 2024

URL: https://aclanthology.org/2024.delite-1.7

- , , , , :

Argument parsing via corpus queries

In: it - Information Technology 63 (2021), p. 31-44

ISSN: 1611-2776

DOI: 10.1515/itit-2020-0051

Reconstructing Arguments from Noisy Text (DFG Priority Programme 1999: RATIO)

(Third Party Funds Single)

Project leader:

Project members: , , ,

Start date: 2018-01-01

End date: 2020-12-31

Acronym: RANT

Funding source: Deutsche Forschungsgemeinschaft (DFG)

Abstract:

Social media are of increasing importance in current public discourse. In RANT, we aim to contribute methods and formalisms for the extraction, representation, and processing of arguments from noisy text found in discussions on social media, using a large corpus of pre-referendum Twitter messages on Brexit as a running case study. We will conduct a corpus-linguistic study to identify recurring linguistic argumentation patterns and design corresponding corpus queries to extract arguments from the corpus, following a high-precision/low-recall approach. In fact, we expect to be able to associate argumentation patterns directly with logical patterns in a dedicated formalism and accordingly parse individual arguments directly as logical formulas. The logical formalism for argument representation will feature a broad range of modalities capturing real-life modes of expression such as uncertainty, agency, preference, sentiment, vagueness, and defaults. We will cast this formalism as a family of instance logics in the generic logical framework of coalgebraic logic, which provides uniform semantic, deductive and algorithmic methods for modalities beyond the standard relational setup; in particular, reasoning support for the logics in question will be based on further development of an existing generic coalgebraic reasoner. The argument representation formalism will be complemented by a flexible framework for the representation of relationships between arguments. These will include standard relations such as Dung's attack relation or a support relation but also relations extracted from metadata such as citation, hashtags, or direct address (via mention of user names), as well as relationships that are inferred from the logical content of individual arguments. 
The latter may take on a non-relational nature, involving, e.g., fuzzy truth values, preference orderings, or probabilities, and will thus fruitfully be modelled in the uniform framework of coalgebra that has already appeared above as the semantic foundation of coalgebraic logic. We will develop suitable generalizations of Dung's extension semantics for argumentation frameworks, thus capturing notions such as “coherent point of view” or “pervasive opinion”; in combination with corresponding algorithmic methods, these will allow for the automated extraction of large-scale argumentative positions from the corpus.
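Dung's extension semantics, which the abstract proposes to generalise, can be illustrated in its standard form. The sketch below computes the grounded extension of a small abstract argumentation framework by iterating the characteristic function from the empty set. The argument names and the `grounded_extension` helper are illustrative assumptions, and the coalgebraic generalisations described above are not modelled.

```python
from typing import Dict, FrozenSet, Set, Tuple

Attack = Tuple[str, str]  # (attacker, target)


def grounded_extension(arguments: Set[str], attacks: Set[Attack]) -> FrozenSet[str]:
    """Least fixed point of the characteristic function of the framework.

    An argument is defended by a set S if every one of its attackers is
    itself attacked by some member of S.
    """
    attackers: Dict[str, Set[str]] = {
        a: {x for (x, y) in attacks if y == a} for a in arguments
    }
    extension: Set[str] = set()
    while True:
        defended = {
            a
            for a in arguments
            if all(
                any((d, b) in attacks for d in extension)
                for b in attackers[a]
            )
        }
        if defended == extension:
            return frozenset(extension)
        extension = defended


# a attacks b, b attacks c; d and e attack each other.
arguments = {"a", "b", "c", "d", "e"}
attacks = {("a", "b"), ("b", "c"), ("d", "e"), ("e", "d")}
print(sorted(grounded_extension(arguments, attacks)))
```

In this toy framework the unattacked argument `a` is accepted, it defends `c` against `b`, and the mutual attack between `d` and `e` leaves both undecided, so neither enters the grounded extension.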

Publications:

- , , , , :

Reconstructing Arguments from Noisy Text

In: Datenbank-Spektrum 20 (2020), p. 123-129

ISSN: 1618-2162

DOI: 10.1007/s13222-020-00342-y

URL: https://link.springer.com/article/10.1007/s13222-020-00342-y

- , , , , , :

Combining Machine Learning and Semantic Features in the Classification of Corporate Disclosures

Logic and Algorithms in Computational Linguistics 2017 (LACompLing2017) (Stockholm, 2017-08-16 - 2017-08-19)

In: Loukanova R, Liefke K (ed.): Proceedings of the Workshop on Logic and Algorithms in Computational Linguistics 2017 (LACompLing2017), Stockholm: 2017

URL: http://su.diva-portal.org/smash/get/diva2:1140018/FULLTEXT03.pdf

- , , :

The Alternating-Time μ-Calculus with Disjunctive Explicit Strategies

29th EACSL Annual Conference on Computer Science Logic (CSL 2021) (University of Ljubljana, 2021-01-25 - 2021-01-28)

In: Christel Baier and Jean Goubault-Larrecq (ed.): Leibniz International Proceedings in Informatics (LIPIcs), Dagstuhl, Germany: 2021

DOI: 10.4230/LIPIcs.CSL.2021.26

- , :

Reconstructing argumentation patterns in German newspaper articles on multidrug-resistant pathogens: a multi-measure keyword approach

In: Journal of Corpora and Discourse Studies (2020), p. 51-74

ISSN: 2515-0251

DOI: 10.18573/jcads.35

URL: https://jcads.cardiffuniversitypress.org/articles/abstract/35/

- , , :

Reconstructing Twitter arguments with corpus linguistics

ICAME40: Language in Time, Time in Language (Neuchâtel, 2019-06-01 - 2019-06-05)

EFE

Attitudes and opinions towards nuclear power and renewable energy and the emergence of a transnational algorithmic public sphere

Abstract

The digitalization of society and media systems has had a major impact on (political) discourses and the formation of public opinion. The purpose of this project is to investigate the transnational algorithmic public sphere, a complex phenomenon that has arisen in an era of globalized mass media and social media connectivity across national borders. An interdisciplinary combination of computational linguistics, network visualization, intercultural hermeneutics and communication science enables us to analyze and map the processes underlying this phenomenon. The project addresses the current political discussion on nuclear power and renewable energy in Germany and Japan following the Fukushima accident.

Recent Activities

- 2018-09-17: Further qualitative results using our developed methodology have been presented at the Fourth Asia Pacific Corpus Linguistics Conference hosted in Takamatsu, Japan. See the slides to our conference contribution “Extending Corpus-Based Discourse Analysis for Exploring Japanese Social Media”.

- 2018-07-17: MMDA alpha – the first operational version of our toolkit for interactive discourse analysis – is running on a virtual machine hosted at FAU. If you want to be a beta-tester, please drop us a line.

- 2018-07-06: The Asahi Shimbun conducted an interview with Fabian Schäfer about our research concerning social bots in the 2014 Japanese General Election.

- 2018-06-25: Stefan Evert gave the 2018 Sinclair Lecture “The Hermeneutic Cyborg” at University of Birmingham, featuring a short demo of the MMDA toolkit.

- 2018-05-08: We presented comprehensive qualitative results analyzing the Fukushima Effect by means of MMDA at the Workshop on Computational Impact Detection from Text Data at the Language Resources and Evaluation Conference in Miyazaki, Japan. See the book of proceedings for our paper “A Transnational Analysis of News and Tweets about Nuclear Phase-Out in the Aftermath of the Fukushima Incident.”

KALLIMACHOS

Corpus-linguistic approaches and statistical methodology in the KALLIMACHOS Centre for Digital Humanities

see website of the main project

Project leader:

Project members:

Contributing FAU Organisations:

Project Details:

Funding source: BMBF / Verbundprojekt

Research Fields:

Abstract (technical / expert description):

This part of the project aims to provide a deeper understanding of the mathematical properties of methods for literary authorship attribution based on stylometric distance measures. Another focus is on the separation of author signal, genre signal and epoch signal which could be beneficial for an automatic genre classification. In addition, reliable statistical methods for testing the significance of the results need to be developed.
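As an illustration of a stylometric distance measure, the sketch below implements a simplified version of Burrows's Delta: relative frequencies of the most frequent words are standardised across documents and compared by mean absolute difference. Choosing Delta as the example is an assumption, since the abstract does not name a specific measure; the toy texts, the `n_words` default and the use of the population standard deviation are likewise illustrative choices.

```python
from collections import Counter
from statistics import mean, pstdev
from typing import Dict, List, Tuple


def burrows_delta(docs: Dict[str, List[str]], n_words: int = 3) -> Dict[Tuple[str, str], float]:
    """Pairwise Delta distances between tokenised documents."""
    # 1. Vocabulary: the n most frequent words over the whole collection.
    overall = Counter(token for tokens in docs.values() for token in tokens)
    vocabulary = [word for word, _ in overall.most_common(n_words)]

    # 2. Relative frequency of each vocabulary word in each document.
    freqs: Dict[str, List[float]] = {}
    for name, tokens in docs.items():
        counts = Counter(tokens)
        freqs[name] = [counts[word] / len(tokens) for word in vocabulary]

    # 3. z-scores per word across documents (population standard deviation).
    z: Dict[str, List[float]] = {name: [] for name in docs}
    for j in range(len(vocabulary)):
        column = [freqs[name][j] for name in docs]
        mu, sigma = mean(column), pstdev(column)
        for name in docs:
            z[name].append((freqs[name][j] - mu) / sigma if sigma > 0 else 0.0)

    # 4. Delta: mean absolute difference of z-scores for each document pair.
    names = sorted(docs)
    return {
        (a, b): mean([abs(x - y) for x, y in zip(z[a], z[b])])
        for i, a in enumerate(names)
        for b in names[i + 1:]
    }


docs = {
    "a1": "the cat and the dog".split(),
    "a2": "the cat and the dog".split(),
    "b": "of of of the sea and".split(),
}
for pair, distance in burrows_delta(docs).items():
    print(pair, round(distance, 3))
```

Realistic use needs long texts and hundreds of frequent words. The questions raised in the abstract, such as separating author, genre and epoch signal and testing significance, concern what distances of this kind do and do not capture.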

Overall project details:

External Partners:

Julius-Maximilians-Universität Würzburg

Publications:
